Shun

状元

Hi all,

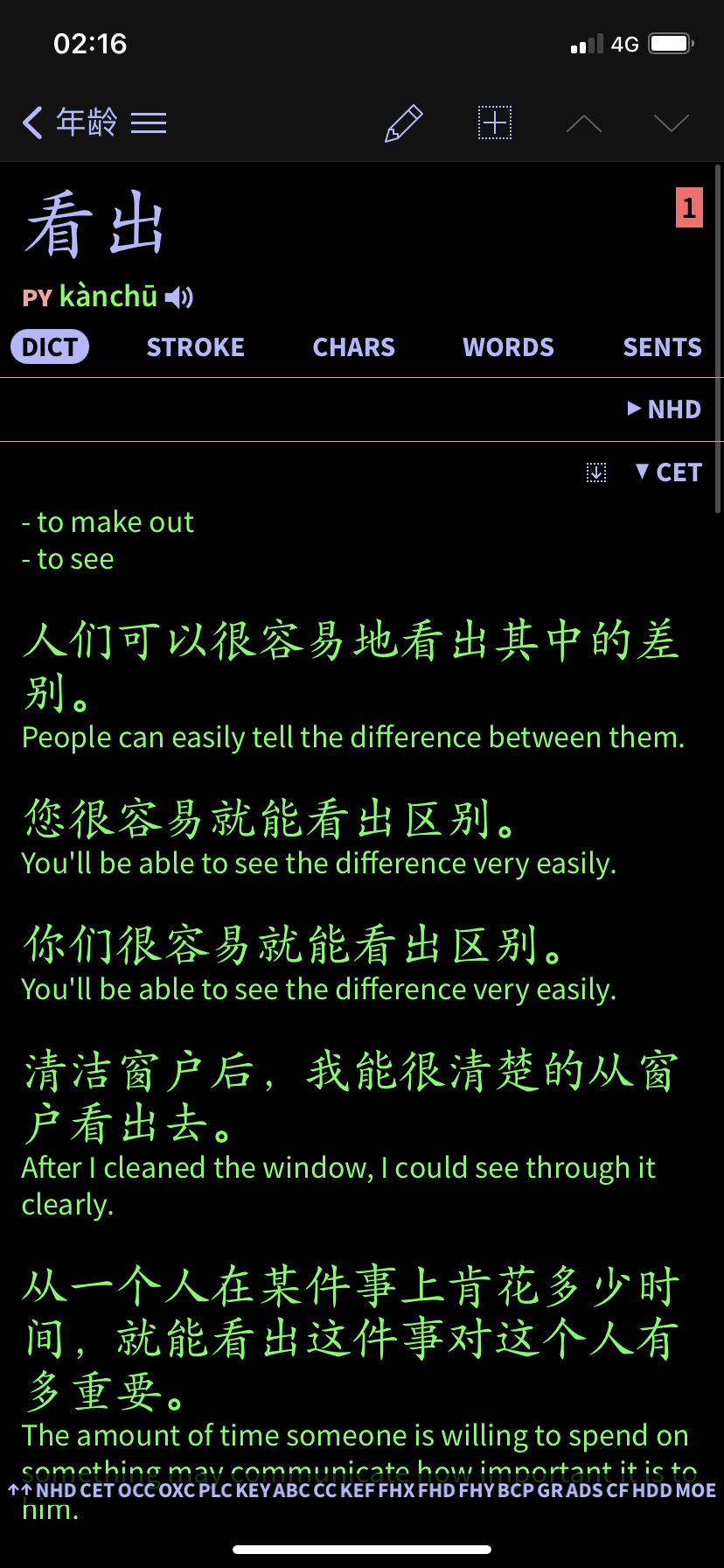

I have created a user dictionary that includes up to fifteen Chinese-English Tatoeba example sentences, where available, with each of the about 118,000 CC-CEDICT dictionary entries. Here's an excerpt from a dictionary entry:

The zipped user dictionary (45 MB) and the text file (9.8 MB) can be downloaded through this link:

CC-CEDICT with Tatoeba Sentences - Google Drive

drive.google.com

One can get something very similar by visiting Tatoeba.org and entering a Chinese expression, though it is surely much more convenient to have these sentences right inside Pleco.

@Natasha's question inspired me to do this. Accessing example sentences this way is quicker than going through the Search feature in Organize Flashcards.

Attribution: I used data from CC-CEDICT and Tatoeba.org for this dictionary. I have attached the Python script I used to build it. Feedback is welcome.
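For anyone curious about the general idea, here is a minimal sketch of how such a merge could work. It is not the attached script, only an illustration under stated assumptions: the Tatoeba data is assumed to be already loaded as a list of (Chinese, English) pairs, matching is done by plain substring search on the simplified headword, and the tab-separated output layout (headword, pinyin, definition) and the ` | ` separator are placeholders. The function names `parse_cedict_line`, `find_examples`, and `build_entry` are hypothetical.

```python
import re

MAX_SENTENCES = 15  # the cap mentioned in the post

# A CC-CEDICT line looks like: Traditional Simplified [pin1 yin1] /gloss 1/gloss 2/
CEDICT_LINE = re.compile(r"^(\S+) (\S+) \[([^\]]*)\] /(.*)/$")


def parse_cedict_line(line):
    """Return (traditional, simplified, pinyin, glosses), or None for comments and blanks."""
    if line.startswith("#"):
        return None
    match = CEDICT_LINE.match(line.strip())
    if match is None:
        return None
    trad, simp, pinyin, glosses = match.groups()
    return trad, simp, pinyin, glosses.split("/")


def find_examples(simplified, sentence_pairs, limit=MAX_SENTENCES):
    """Collect up to `limit` (chinese, english) pairs whose Chinese text contains the headword."""
    examples = []
    for chinese, english in sentence_pairs:
        if simplified in chinese:
            examples.append((chinese, english))
            if len(examples) == limit:
                break
    return examples


def build_entry(line, sentence_pairs):
    """Build one tab-separated entry (assumed layout: headword, pinyin, definition)."""
    parsed = parse_cedict_line(line)
    if parsed is None:
        return None
    _trad, simp, pinyin, glosses = parsed
    definition = "; ".join(glosses)
    for chinese, english in find_examples(simp, sentence_pairs):
        definition += f" | {chinese} {english}"
    return f"{simp}\t{pinyin}\t{definition}"
```

Scanning every sentence for each of roughly 118,000 entries would be slow, so a real script would probably build an index from words to sentences first. The sketch keeps the linear scan for readability.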

Enjoy,

Shun
